Multimodal Feedback Control for Aerial Manipulation

Can we build adaptive drones to perform aerial physical interaction while using visual and tactile feedback?

Yes, and we can showcase their abilities in Aerial Writing tasks.

This project was conducted as a Master Thesis at the Smart Robotics Lab at Imperial College London (ICL). A micro aerial vehicle (MAV) equipped with a delta arm is used for aerial manipulation, demonstrated through Aerial Writing to make the accuracy visible. A multimodal feedback pipeline is proposed that enables Aerial Writing while adapting to unknown disturbances such as localization drift and calibration errors.

The three main contributions of the thesis are:

- To address the lack of a standardized evaluation metric for Aerial Writing, an appearance-based visual error metric is proposed to quantify the overall drawing precision of the system.

- Three separate multimodal feedback components are proposed to cope with MAV drift, whiteboard misalignment and model errors in the pen control.

- The proposed approach is evaluated in extensive high-fidelity simulations, which show a drastic improvement in drawing precision.

When: 2020

What: Master Thesis at Imperial College London

Who: Felix Graule

Supervisors: Dr. Stefan Leutenegger and Prof. Dr. Margarita Chli

Report: Multimodal Feedback Control for Aerial Manipulation

Final Talk: Slide deck

Cross-Spectral SLAM for Soaring Glider UAV

How can we increase the mission time of glider drones and make them fly during the night?

Using thermal vision as part of a cross-spectral SLAM system!

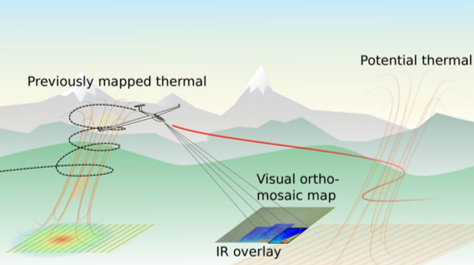

This project was conducted as a Semester Thesis at the Autonomous Systems Laboratory (ASL) at ETH. We propose using a thermal map to navigate a glider UAV towards predicted locations of thermal updrafts, where it soars autonomously to gain altitude.

The main contributions of the thesis are:

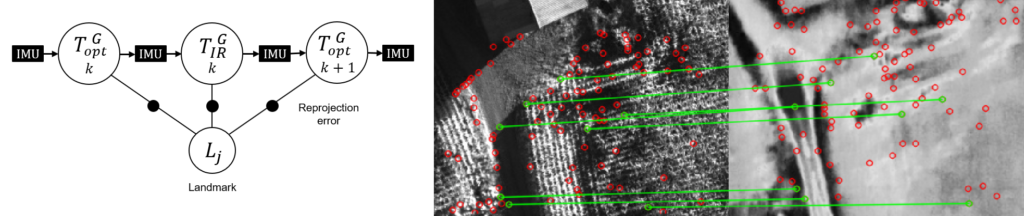

- To allow decision making in the optical reference frame of the UAV, we conduct an in-depth literature review of existing cross-spectral image matching methods.

- We propose jointly optimizing landmarks from both optical and thermal images to arrive at a cross-spectral pose graph.

- We generate the first large-scale training data set for cross-spectral image matching.

- We propose a novel Deep Learning architecture that solves cross-spectral homography estimation end to end.
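For readers unfamiliar with the term, a homography is a 3x3 matrix that maps pixel coordinates in one image to pixel coordinates in another, here from a thermal image to an optical one. The short Python sketch below only shows how such a matrix is applied to a point. It says nothing about how the thesis estimates the matrix, and the function name `apply_homography` and the example matrix are made up for illustration.

```python
def apply_homography(h, x, y):
    """Map pixel (x, y) through a 3x3 homography h given as nested lists."""
    # Homogeneous coordinates: multiply (x, y, 1) by h, then divide by the
    # third component to get back to pixel coordinates.
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if w == 0:
        raise ValueError("point maps to infinity under this homography")
    u = (h[0][0] * x + h[0][1] * y + h[0][2]) / w
    v = (h[1][0] * x + h[1][1] * y + h[1][2]) / w
    return u, v


# Hypothetical example matrix: a pure shift of 12 px right and 5 px up.
shift = [[1.0, 0.0, 12.0],
         [0.0, 1.0, -5.0],
         [0.0, 0.0, 1.0]]
thermal_pixel = (100.0, 50.0)
optical_pixel = apply_homography(shift, *thermal_pixel)
```

An estimated homography would take the place of `shift`, typically with non-zero entries in the last row to model perspective effects.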

When: 2019

What: Semester Thesis at ETH Zürich

Who: Felix Graule

Supervisors: Timo Hinzmann, Dr. Nicholas Lawrance, Florian Achermann, Prof. Dr. Roland Siegwart

Report: Towards Robust Cross-Spectral Optical Thermal SLAM onboard a fixed-wing UAV

Final Talk: Slide deck

Top figure courtesy of Dr. Nicholas Lawrance

Boston Consulting Group

During a three-month internship as a Visiting Associate, I worked on the valuation of mobile broadband spectrum for service providers in Canada and Germany.

This allowed me to peek into Management Consulting, which I will keep pursuing as the next step of my career.

When: 2018

What: Internship outside of curriculum

Supervisors: Dr. Lukas Bruderer and Marco Poltera

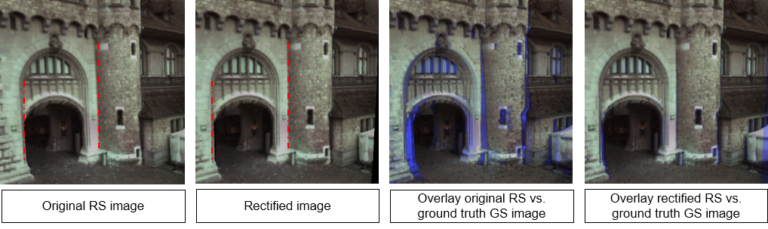

Rolling-shutter Rectification

Have you ever watched a smartphone video taken on a train and been annoyed by how vertical lines appear slanted?

Using 3D vision, our algorithm can correct this distortion!

This is a C++ implementation of the work proposed by Zhuang et al.

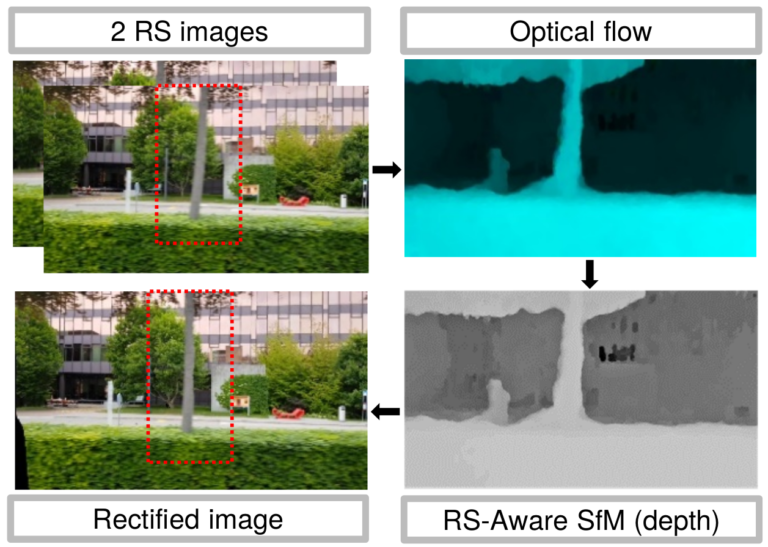

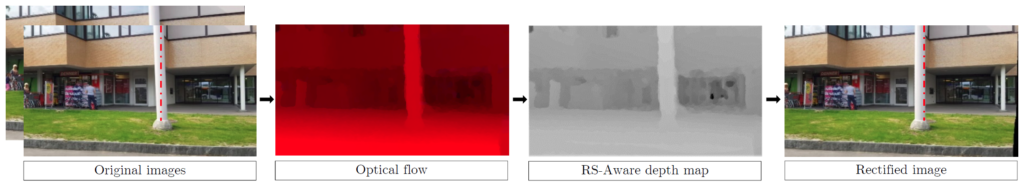

The image above shows an overview of the algorithm.

- We start with two consecutive rolling-shutter (RS) frames.

- We calculate the optical flow between them using DeepFlow.

- We estimate an RS-aware depth map and the relative camera poses from the optical flow.

- Using the known pose for each scanline of the image, we reproject the pixels into a global-shutter image frame for rectification.
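The final reprojection step can be sketched as follows. This is a minimal Python illustration, not the project's C++ code and not necessarily the exact formulation of Zhuang et al. It assumes a pinhole camera, a constant camera velocity during readout, and a first-order (small-motion) approximation. The function name `rectify_pixel`, the parameter `readout_ratio` and the sign conventions are assumptions made for this example.

```python
def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rectify_pixel(u, row, depth, height, fx, fy, cx, cy,
                  lin_vel, ang_vel, readout_ratio=1.0):
    """Move one rolling-shutter pixel into the global-shutter frame.

    The global-shutter frame is taken to be the camera pose at row 0.
    lin_vel and ang_vel are the camera's linear and angular velocity per
    frame interval, expressed in the camera frame (assumed convention).
    """
    # Each scanline is exposed slightly later than the one above it.
    t = readout_ratio * row / height

    # Back-project the pixel to a 3D point in the camera frame at time t.
    point = ((u - cx) / fx * depth, (row - cy) / fy * depth, depth)

    # A static point moves in the camera frame with velocity
    # -(lin_vel + ang_vel x point), so undoing the motion accumulated over
    # time t adds t * (lin_vel + ang_vel x point), to first order.
    rot = cross(ang_vel, point)
    p0 = tuple(point[i] + t * (lin_vel[i] + rot[i]) for i in range(3))

    # Project the corrected point with the same pinhole intrinsics.
    return fx * p0[0] / p0[2] + cx, fy * p0[1] / p0[2] + cy


# A camera translating sideways: rows read out later are shifted further.
top = rectify_pixel(60.0, 0, 2.0, 100, 100.0, 100.0, 50.0, 50.0,
                    (0.2, 0.0, 0.0), (0.0, 0.0, 0.0))
mid = rectify_pixel(60.0, 50, 2.0, 100, 100.0, 100.0, 50.0, 50.0,
                    (0.2, 0.0, 0.0), (0.0, 0.0, 0.0))
```

Row 0 is left where it is because it defines the reference pose, while lower rows are shifted in proportion to their readout delay and inversely to their depth. That is why a depth map is needed before the image can be rectified.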

When: 2018

What: Course Project at ETH Zürich (3D Vision 252-0579-00L)

Who: Manuel Fritsche, Felix Graule and Thomas Ziegler

Supervisor: Dr. Oliver Saurer

Report: Rolling-Shutter Aware Differential Structure from Motion and Image Rectification

Supercomputing Systems

During a six-month internship, I helped build a single-frequency cellular communication network used by emergency services in Austria.

As part of a team with three Senior Software Engineers, I worked on a communication framework used as an alerting system for emergency services and contributed to the back-end in C++ and Python as well as to the web front-end in JavaScript. During this time, I worked independently using Git and Jira.

This internship gave me experience in developing production-level code and deepened my coding skills and my understanding of real-time systems.

When: 2017

What: Industrial Internship as part of Master’s

Supervisors: Christof Sidler and Samuel Zahnd

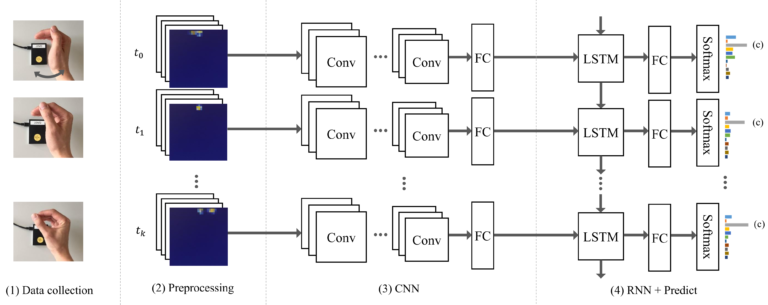

Google Soli

Radar can be used not only to detect airplanes but also for gesture recognition, as in Google Soli.

This project allowed me to work with Deep Learning for the first time!

The goal of this project was to gain first experience in the field of artificial intelligence, to develop an in-depth understanding of the deep learning gesture recognition pipeline for the Google Soli sensor proposed by S. Wang and J. Song, and eventually to improve its performance.

While working with the Institute for Media Innovation (IMI), led by Prof. Nadia Thalmann, I also got to talk to their humanoid robot Nadine. See for yourself in the video below.

When: 2017

Who: Felix Graule

Supervisor: Prof. Dr. Junsong Yuan

What: Research Project at NTU Singapore

Report: Exploring Gesture Recognition using Deep Learning based on Google Soli

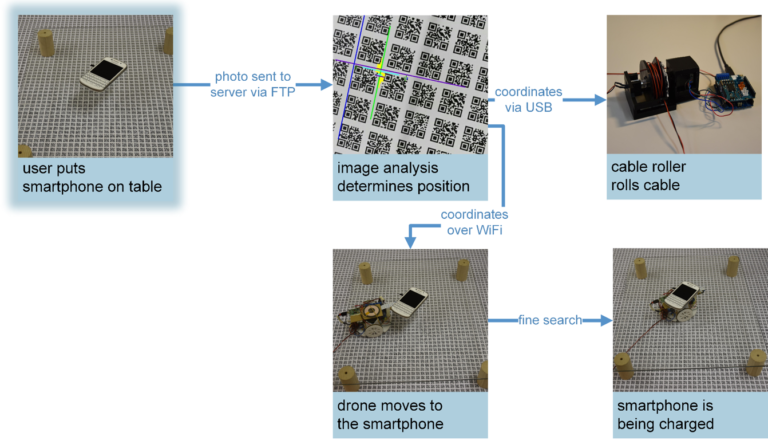

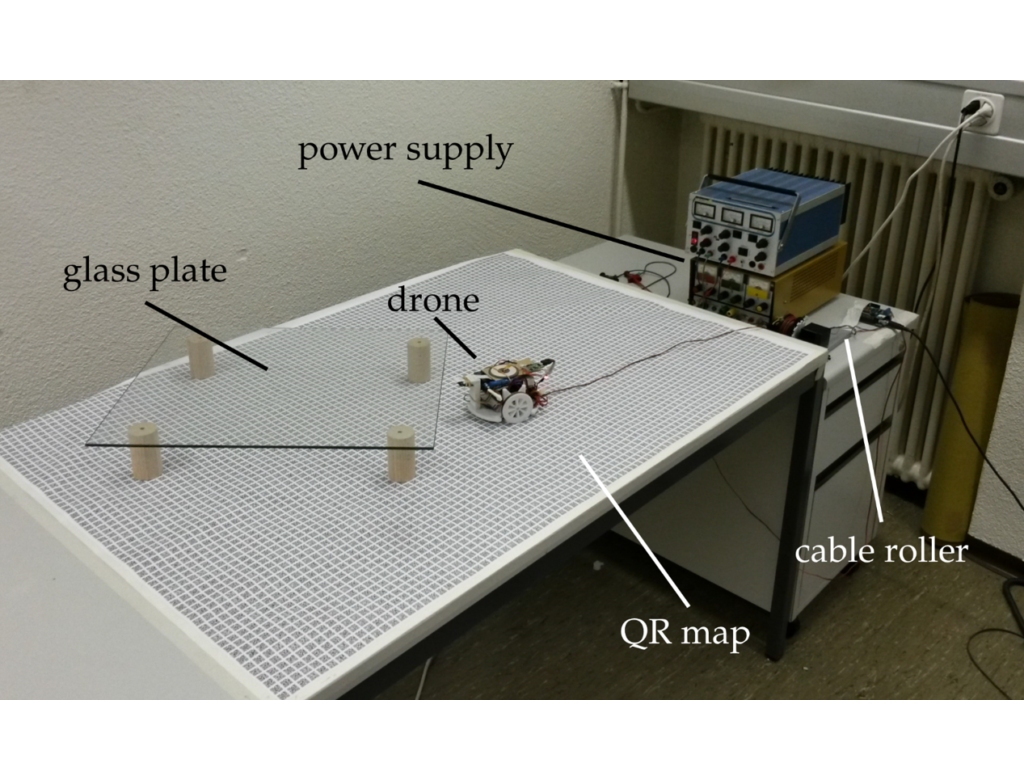

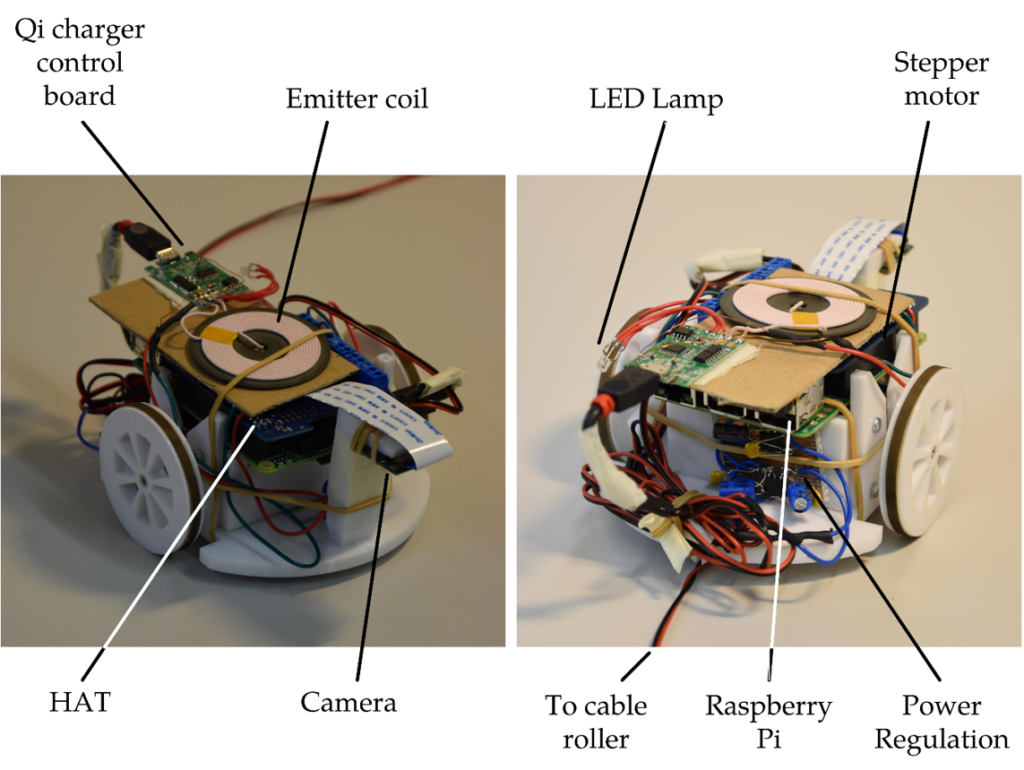

InducTable

Charging your phone means fighting over one of the few power outlets at the library.

We came up with a solution to end this!

Wireless charging with the popular Qi standard has so far required users to align their device exactly with the charging coil. We wanted to remove this restriction and built a desk that automatically starts charging your phone as soon as you lay it down.

When: 2016

What: Group Project at ETH Zürich

Who: Raphaela Eberli, Nicolas Früh and Felix Graule

Supervisors: Prof. Helmut Bölcskei and Michael Lerjen

Report: InducTable – A Table for Position-Independent Wireless Charging

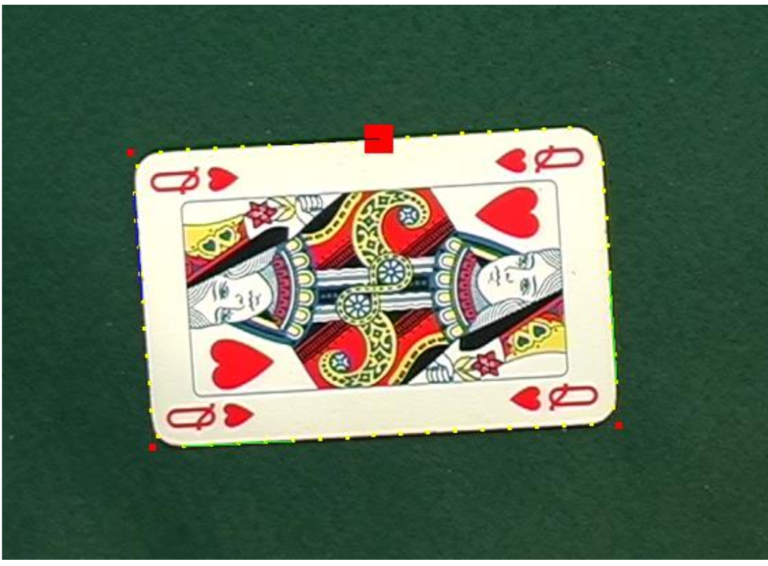

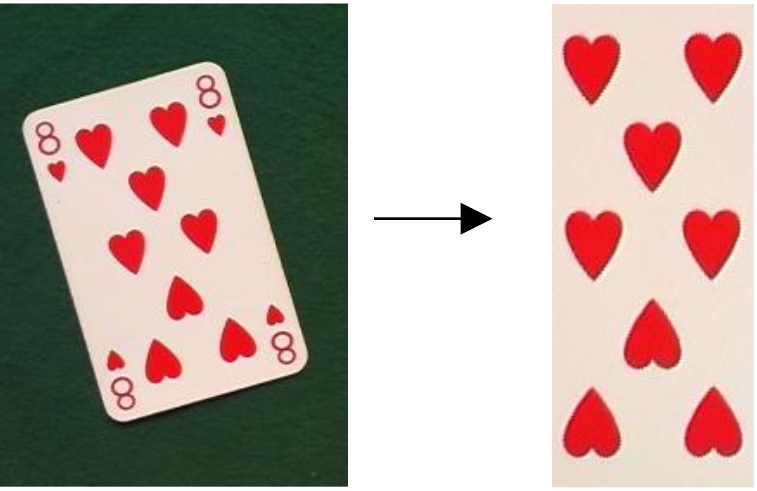

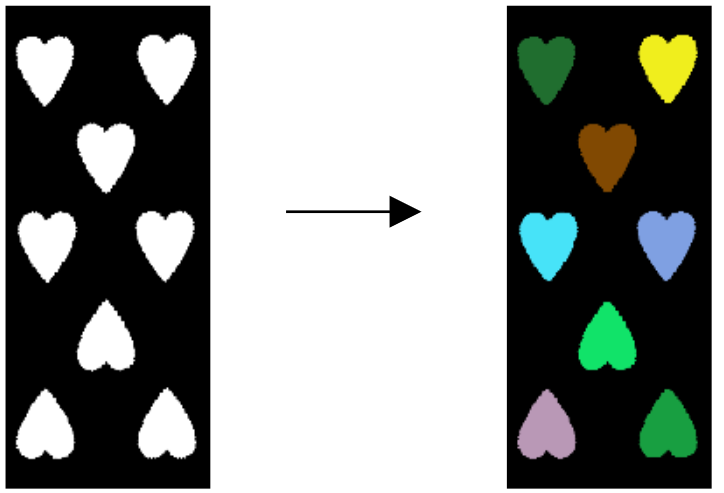

Vision goes Vegas

Playing Blackjack has never been this easy and fun.

Our software bot plays autonomously using Computer Vision to detect the cards!

When: 2015

What: P&S project at ETH Zürich

Who: Nicolas Früh and Felix Graule

Supervisor: Dr. Andras Bodis-Szomoru

Report: Vision goes Vegas

Code: Repository

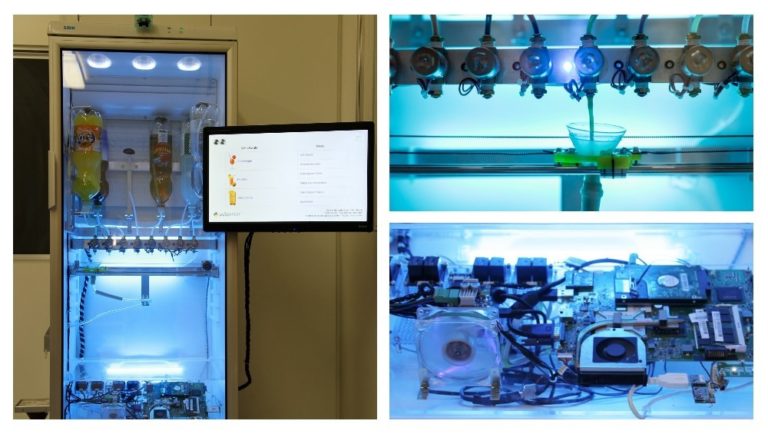

Cocktail Robot

Mixing cocktails has never been this easy!

Just select your favourite drink and let the robot do the rest.

pySpenser can mix 8 different ingredients to create a wide range of flavours. All ingredients are cooled continuously to extend storage life and to improve taste. The customer can order drinks using the intuitive touch interface. Regular customers can use the integrated fingerprint sensor to easily order their usual drink. Further highlights of the robot are:

- Precise dosing mechanism based on magnetic valves and Torricelli’s law

- Transparent design to visualize mixing process

- Glass and environment surveillance
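To illustrate the dosing principle from the first highlight: Torricelli's law gives the outflow speed of a liquid under a fill height h as v = sqrt(2 g h), so a valve can dose by time instead of by measured volume. The Python sketch below is a simplified illustration and not the robot's actual control code. It assumes a constant fill height while pouring and an ideal outlet, and the names `valve_open_time` and `discharge_coeff` as well as the example numbers are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def valve_open_time(volume_m3, outlet_area_m2, fill_height_m,
                    discharge_coeff=1.0):
    """Seconds a valve must stay open to release volume_m3 of liquid.

    Assumes the fill height stays constant while pouring.
    discharge_coeff is an assumed correction for a non-ideal outlet.
    """
    if fill_height_m <= 0:
        raise ValueError("fill height must be positive")
    speed = math.sqrt(2.0 * G * fill_height_m)  # Torricelli's law
    flow_rate = discharge_coeff * outlet_area_m2 * speed  # m^3 per second
    return volume_m3 / flow_rate


# Hypothetical numbers: 40 ml through a 4 mm outlet under 20 cm of liquid.
area = math.pi * 0.002 ** 2
seconds = valve_open_time(40e-6, area, 0.20)
```

Because the outflow speed depends on the square root of the fill height, a container at a quarter of the fill height needs the valve open twice as long for the same volume. A real dosing routine therefore has to track the remaining liquid level.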

Tinkerforge and Arduino boards are used to control the actuators and read out the sensors. The software is written in Python and C.

When: 2014

What: Final Year Thesis in High School

Who: Felix Graule

Report: Development of a Cocktail Robot (only in German)

The project was awarded, sponsored or mentioned by the following institutions/companies: